Repeating a Matrix Multiplication Benchmark

I watched the Hennessy & Patterson's Turing award lecture recently:

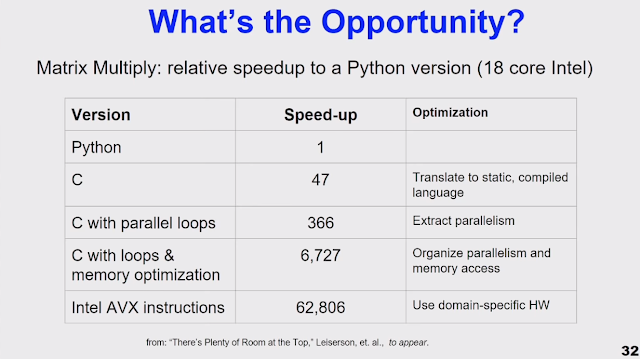

In it, there's a slide comparing the performance of various matrix multiplication implementations, using Python (presumably CPython) as a baseline and comparing that against various C implementations (I couldn't find the linked paper yet):

I expected the baseline speedup of switching from CPython to C to be higher and I also wanted to know what performance PyPy gets, so I did my own benchmarks. This is a problem that Python is completely unsuited for, so it should give very exaggerated results.

The usual disclaimers apply: All benchmarks are lies, benchmarking of synthetic workloads even more so. My implementation is really naive (though I did optimize it a little bit to help CPython), don't use any of this code for anything real. The benchmarks ran on my rather old Intel i5-3230M laptop under Ubuntu 17.10.

With that said, my results were as follows:

| Implementation | time | speedup over CPython | speedup over PyPy |

|---|---|---|---|

| CPython | 512.588 ± 2.362 s | 1 × | |

| PyPy | 8.167 ± 0.007 s | 62.761 ± 0.295 × | 1 × |

| 'naive' C | 2.164 ± 0.025 s | 236.817 ± 2.918 × | 3.773 ± 0.044 × |

| NumPy | 0.171 ± 0.002 s | 2992.286 ± 42.308 × | 47.678 ± 0.634 × |

This is running 1500x1500 matrix multiplications with (the same) random matrices. Every implementation is run 50 times in a fresh process. The results are averaged, the errors are bootstrapped 99% confidence intervals.

So indeed the speedup that I got of switching from CPython to C is quite a bit higher than 47x! PyPy is much better than CPython, but of course can't really compete against GCC. And then the real professionals (numpy/OpenBLAS) are in a whole 'nother league. The speedup of the AVX numbers in the slide above is even higher than my NumPy numbers, which I assume is the result of my old CPU with two cores, vs. the 18 core CPU with AVX support. Lesson confirmed: leave matrix multiplication to people who actually know what they are doing.

Comments